Understanding Asynchronous Queueing pattern

As cloud projects grow to include multiple services, instances, microservices, autoscalers and much more, decoupling the different agents in the architecture can be really handy.

Asynchronous queueing is a great architecture pattern for achieving that. It adds asynchronicity to the architecture, decoupling the different agents and making it possible to enhance the robustness and scalability of the project. Of course, some trade-offs have to be made as well.

Lately I have been playing around with SAP Event Mesh, the SAP BTP service that makes it very easy to implement event messaging using WebHooks, MQTT, AMQP or HTTP protocols. Combined with SAP CAP, the configuration boilerplate is extremely simple, and the learnings deserve some quick posts.

I am going to elaborate on this topic in a series of posts:

- Understanding Asynchronous Queueing pattern (this post)

- Leverage event messaging with SAP Event Mesh and SAP CAP

- Consuming CAP services with UI5 via WebSockets (in progress...)

- Autoscaling based on queue size in SAP BTP (in progress...)

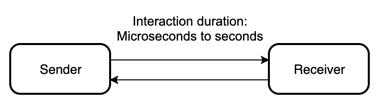

Synchronous vs Asynchronous

Synchronous

- The sender sends a request and waits for an immediate response.

- There might be asynchronous I/O processing on the server, but the logical thread or channel is preserved.

- Correlation between request and reply is typically implicit, given by the order of the requests.

- Errors, exceptions, etc. typically flow back on the same path.

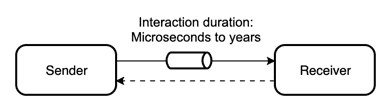

Asynchronous

- Sender sends a message and then proceeds to do other things. It does not wait for anything.

- Replies (if there are any) might flow back on a separate path.

- Messages might be stored by intermediaries.

- Message delivery might be attempted multiple times.

- High decoupling of sender and receiver.

Diagram

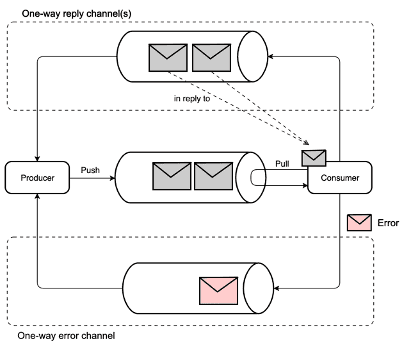

The architecture includes queues between the sender and the receiver.

Queues are storage units. They store the messages sent by the sender, and those messages wait in the queue until a receiver picks them up.

The Sender just sends the message to the corresponding queue and closes the connection. It trusts the system: the message has been stored and is now waiting to be attended. The Sender doesn't know and doesn't care which specific Receiver will attend that request. This is where the decoupling of the two comes from.

The Receiver listens for messages on a specific queue. Once a message arrives, if the Receiver is not busy, it attends the incoming message. Once it finishes processing, if a response is needed, it pushes it to a response queue, trusting that whoever is interested in that response will be listening on that queue. The Receiver doesn't know and doesn't care who sent the request to the incoming queue, nor who will be listening for the response (outcome) generated after processing.
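The flow described above can be sketched in a few lines of Python. This is only an in-process illustration that assumes the standard library `queue.Queue` as a stand-in for a real message broker; the names `receiver`, `send`, `run_demo` and the `STOP` sentinel are hypothetical and not part of any SAP API.

```python
import queue
import threading

STOP = object()  # sentinel used only to shut this demo down


def receiver(request_queue, response_queue):
    """Listen on the request queue and push outcomes to the response queue."""
    while True:
        message = request_queue.get()  # blocks until a message arrives
        if message is STOP:
            break
        # The receiver does not know who sent the message or who reads the reply.
        outcome = {"id": message["id"], "result": message["payload"].upper()}
        response_queue.put(outcome)


def send(request_queue, message_id, payload):
    """Hand the message to the queue and return without waiting for a result."""
    request_queue.put({"id": message_id, "payload": payload})


def run_demo():
    request_queue = queue.Queue()
    response_queue = queue.Queue()
    worker = threading.Thread(target=receiver, args=(request_queue, response_queue))
    worker.start()

    send(request_queue, 1, "hello")
    send(request_queue, 2, "world")
    request_queue.put(STOP)
    worker.join()

    # Whoever is interested in the outcomes reads them from the response queue.
    return [response_queue.get() for _ in range(2)]
```

Note that `send` returns as soon as the message is stored, and the only thing the sender and the receiver share is the pair of queues.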

One Way Communication

REST calls:

We usually get an immediate response. We know the outcome of an operation / function call.

Messaging / event oriented services:

We have to trust the middleware not to lose the messages we send, and then leave it to negotiate with the receiver(s) to process those messages and generate outcomes.

- Asynchronous flows are generally one-way.

- The producer gets no initial answer other than the message having been accepted into the queue/buffer.

- Reply and error channels are explicit and decoupled from the send channels.

- Correlation is flexible. Multiple replies may refer to one message: a single message can be distributed to many systems, and the answers from all of them can then be collected.
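To make the correlation idea more concrete, here is a minimal sketch in which one message is distributed to several consumers and the replies are matched back through an explicit correlation ID. It assumes in-memory `queue.Queue` objects instead of a real broker, and the function `scatter_gather` and the message fields `correlation_id`, `from` and `result` are hypothetical names chosen for the example.

```python
import queue
import threading
import uuid


def scatter_gather(payload, handlers):
    """Send one message to several consumers and collect replies by correlation ID."""
    correlation_id = str(uuid.uuid4())
    reply_queue = queue.Queue()  # explicit reply channel, separate from the send channels
    inboxes = {name: queue.Queue() for name in handlers}

    def consumer(name, handler):
        message = inboxes[name].get()  # wait for the distributed message
        reply_queue.put({
            "correlation_id": message["correlation_id"],  # copied from the request
            "from": name,
            "result": handler(message["payload"]),
        })

    threads = [
        threading.Thread(target=consumer, args=(name, handler))
        for name, handler in handlers.items()
    ]
    for thread in threads:
        thread.start()

    # Distribute the same message to every consumer inbox.
    for inbox in inboxes.values():
        inbox.put({"correlation_id": correlation_id, "payload": payload})

    for thread in threads:
        thread.join()

    # Collect every reply that refers to our message.
    replies = {}
    while not reply_queue.empty():
        reply = reply_queue.get()
        if reply["correlation_id"] == correlation_id:
            replies[reply["from"]] = reply["result"]
    return replies
```

For example, `scatter_gather(3, {"double": lambda x: x * 2, "square": lambda x: x * x})` gathers one reply per consumer, keyed by the consumer name.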

Problems this pattern solves

Performance and reliability issues of sync communication

- Long-running tasks can be processed in parallel across multiple servers, increasing performance.

- There is no need to keep connections alive for a long time, which reduces the risk of losing the connection due to timeouts or other network issues.

- The producer is released after sending the message and can work on other tasks while the message is being processed.

- A failing task can be retried multiple times, increasing robustness.

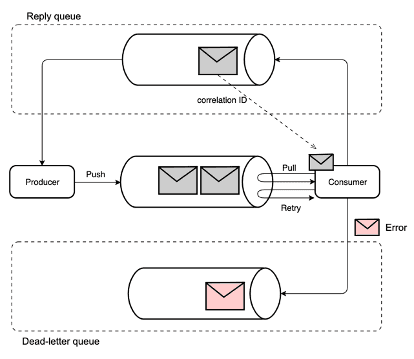

Long-running work

- Processing at the consumer might take very long (minutes, hours…).

- Processing at the consumer may require reliable, one-time outcomes (like execution of payments). We cannot afford timeout errors in the middle of the process, which might end up with partially completed requests.

- Producers entrust jobs into a queue. They will be completed at some point.

- Consumers negotiate the outcomes with the queue, including any retries required due to intermittent failures.

- Bad jobs are moved into a dead-letter queue for inspection.

- Flow back to the producer is performed through a reply queue, decoupled from the request queue.
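The retry and dead-letter behaviour listed above could look roughly like the following sketch. It is a simplified, single-threaded illustration: real brokers usually handle redelivery themselves, and the retry limit `MAX_ATTEMPTS`, the envelope fields and the function names `process_with_retries` and `drain` are assumptions made for this example.

```python
import queue

MAX_ATTEMPTS = 3  # assumed retry limit, not taken from any specific broker


def drain(q):
    """Return everything currently stored in a queue as a list."""
    items = []
    while not q.empty():
        items.append(q.get())
    return items


def process_with_retries(jobs, handler, max_attempts=MAX_ATTEMPTS):
    """Process jobs from a work queue, retrying failures and dead-lettering bad jobs."""
    work_queue = queue.Queue()
    reply_queue = queue.Queue()        # outcomes flow back here, not on the request queue
    dead_letter_queue = queue.Queue()  # jobs that kept failing, parked for inspection

    for job in jobs:
        work_queue.put({"job": job, "attempts": 0})

    while not work_queue.empty():
        envelope = work_queue.get()
        envelope["attempts"] += 1
        try:
            result = handler(envelope["job"])
        except Exception as error:
            if envelope["attempts"] < max_attempts:
                work_queue.put(envelope)  # put it back for another attempt
            else:
                envelope["error"] = str(error)
                dead_letter_queue.put(envelope)
        else:
            reply_queue.put({"job": envelope["job"], "result": result})

    return drain(reply_queue), drain(dead_letter_queue)
```

A job that fails intermittently eventually lands in the reply queue, while a job that fails on every attempt ends up in the dead-letter queue together with its last error.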

Load Leveling

- Queues/Buffers act as an inbox for requests to a consumer.

- Consumer pulls work when it has capacity for processing.

- Consumer processes at its own pace, without ever getting overloaded.

- The trade-off is that work might take longer to complete.
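As a rough illustration of load leveling, the sketch below lets a producer drop a burst of requests into an inbox while a single consumer works through them one at a time. It assumes an in-memory `queue.Queue` as the buffer; `level_load` and the `work_seconds` delay are hypothetical and only simulate processing time.

```python
import queue
import threading
import time


def level_load(burst, work_seconds=0.001):
    """Absorb a burst of requests in a queue and process them at the consumer's pace."""
    inbox = queue.Queue()

    # The producer hands over the whole burst at once and is done.
    for request in burst:
        inbox.put(request)
    backlog_after_burst = inbox.qsize()

    processed = []

    def consumer():
        while True:
            try:
                request = inbox.get_nowait()  # pull the next request when ready
            except queue.Empty:
                return  # inbox is empty, nothing left to do
            time.sleep(work_seconds)  # simulated processing time
            processed.append(request)

    worker = threading.Thread(target=consumer)
    worker.start()
    worker.join()
    return backlog_after_burst, processed
```

The burst is fully absorbed by the queue, and the consumer handles one request at a time, so the cost of a spike is a longer wait rather than an overloaded consumer.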

Load balancing and autoscaling

- The queue length is observed. If it goes over a certain threshold, more consumers can be added to auto-scale based on the true load. The same logic can be used to scale down.

- Multiple consumers compete for messages.

- Each consumer only pulls work when it has capacity for processing.
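Both ideas can be sketched briefly: a scaling rule derived from the queue length, and several consumers competing for messages on one queue. The thresholds and the names `desired_consumers`, `messages_per_consumer` and `compete` are assumptions for illustration; an actual autoscaler on SAP BTP would be configured differently.

```python
import math
import queue
import threading


def desired_consumers(queue_length, messages_per_consumer=10,
                      min_consumers=1, max_consumers=5):
    """Derive a consumer count from the observed queue length, within fixed bounds."""
    needed = math.ceil(queue_length / messages_per_consumer)
    return max(min_consumers, min(max_consumers, needed))


def compete(messages, consumer_count):
    """Let several consumers pull from one queue; each message is handled once."""
    work_queue = queue.Queue()
    for message in messages:
        work_queue.put(message)

    handled = {index: [] for index in range(consumer_count)}

    def consumer(index):
        while True:
            try:
                message = work_queue.get_nowait()  # pull only when ready for more work
            except queue.Empty:
                return
            handled[index].append(message)

    threads = [threading.Thread(target=consumer, args=(index,))
               for index in range(consumer_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return handled
```

With the default values, a backlog of 25 messages asks for three consumers, an empty queue scales down to the minimum of one, and a very long queue is capped at five. How the messages are split between the competing consumers is not deterministic, but every message is handled by exactly one of them.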

Consequences and potential problems

- Not suitable for applications where synchronous communication is needed (an immediate response is required to continue execution).

- Data integrity and consistency might be a challenge, since different consumers can hold different state (e.g. DB R/W operations being slower than system messages).

- There may be no acknowledgement of successful message delivery, and atomic transactions may not be possible.

- Message queues might introduce latency and consume bandwidth and other resources, which may affect the performance or efficiency of the system. Trade-offs have to be made.

- Messages might be stored by intermediaries, so privacy policies have to be revisited.

- Queues might need backup configurations for the highest standards of reliability.